TikTok has released an update to its community safety policies, designed to protect teenage users, following a major report on how users interact with potentially harmful challenges and hoaxes.

A few months ago, TikTok launched a global research project into this topic, which included a survey of more than 10,000 teens, parents and teachers from Argentina, Australia, Brazil, Germany, Italy, Indonesia, Mexico, the U.K., the U.S. and Vietnam. It also commissioned an independent safeguarding agency, Praesidio Safeguarding, to write a report detailing the findings and its recommendations. A panel of 12 leading teen safety experts was also asked to review the report and provide its own input. Finally, TikTok partnered with Dr. Richard Graham, a clinical child psychiatrist specializing in healthy adolescent development, and Dr. Gretchen Brion-Meisels, a behavioral scientist specializing in risk prevention in adolescence, to offer further guidance.

How teens engage with disturbing challenges and harmful hoaxes, including those involving self-harm or suicide

The data the report uncovered is worth examining, as it shows how social media can become a breeding ground for harmful content such as viral challenges. Social platforms are heavily used by young people, who have a much larger appetite for risk because of where they are in their psychological development.

As Dr. Graham explained, puberty is an extraordinary period that prepares a child for the transition to adult life. It’s a time of “massive brain development.”

There is a lot of focus now on understanding why teens do the things they do because those judgment centers are being revised again in preparation for more complex decision-making and thinking in the future. Sometimes they’re going to bite off more than they can chew, and have an experience that in a way traumatizes them at least in the short-term, but the teens’ aspiration is to grow.

Dr. Graham

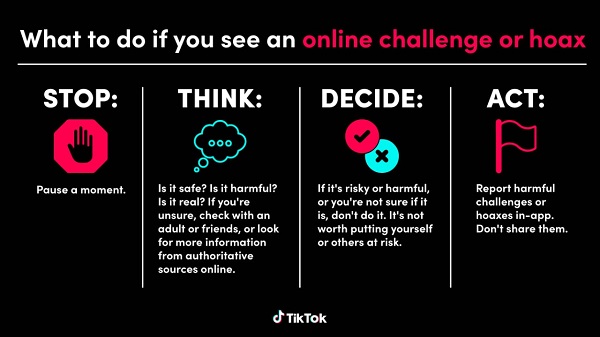

Viral challenges can appeal to teens’ desire for approval from friends and peers because they result in likes and views. But the way teens assess whether a challenge is safe is flawed: they tend to simply watch more videos or ask friends for advice. Parents and teachers, meanwhile, have often been hesitant to talk about challenges and hoaxes for fear of prompting more interest in them.

The study found that most teens aren’t participating in the most dangerous challenges. Only 21% of teens surveyed globally were participating in challenges, and only 2% had participated in challenges considered risky. An even smaller share, 0.3%, had taken part in a challenge they considered “really dangerous.” Most thought participation in challenges was either neutral (54%) or positive (34%), rather than negative (11%). And 64% said participation had a positive impact on their friendships and relationships.

TikTok removing alarmist warnings about potentially harmful online challenges and hoaxes

As part of its policy updates in response to the research, TikTok said the technology it uses to alert its safety teams to increases in prohibited content linked to hashtags will be extended to also capture potentially dangerous behavior that attempts to hijack or piggyback on an otherwise common hashtag.

TikTok said it would also improve the language used in its content warning labels to encourage users to visit its Safety Centre for more information, and would add new materials there aimed at parents and carers unsure how to discuss the subject with children.

The aim of the project was to better understand young people’s engagement with potentially harmful challenges and hoaxes. While not unique to any one platform, the effects and concerns are felt by all, and we wanted to learn how we might develop even more effective responses as we work to better support teens, parents, and educators. For our part, we know the actions we’re taking now are just some of the important work that needs to be done across our industry, and we will continue to explore and implement additional measures on behalf of our community.

Alexandra Evans – TikTok’s Head of Safety Public Policy for Europe

Evans added that the company wanted to help contribute to a wider understanding of this area.

TikTok has made a number of updates to its platform over the last year, particularly around safety for younger users. The app has tightened default privacy settings and restricted access to direct messaging for younger users, and has expanded its Safety Centre and online resources to offer more information and guidance.

In September, research carried out by anti-abuse campaign group Hope not Hate suggested that the public does not trust social media companies to deal with online abuse and hateful content, and that a majority of the public supports increased regulation.

TikTok additionally said it will look for sudden increases in violating content linked to hashtags, including potentially dangerous behavior. In other words, TikTok says it will now moderate content more effectively, something users thought the company was already doing.